Past projects

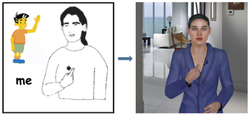

Avatars translate American Sign Language

3D Signing Virtual Agents: In this project, funded by the National Science Foundation (described further here), we design 3D signing virtual agents that can dynamically sign in American Sign Language (ASL). We partner with the Institute for Disabilities Research and Training (IDRT) to animate 3D characters in real time during motion capture of human signers’ ASL gestures and facial expressions.

Emotion Recognition from Physiological Signals

Capture of physiological signals

In this project (described here), we simulate driving scenes and scenarios in an immersive 3D virtual reality environment to sense and interpret drivers’ affective states (e.g., anger, fear, boredom).

Drivers wear non-invasive biosensors while operating vehicles with haptic feedback (e.g., brake failure, shaking from a flat tire) and experience emotionally loaded, interactive 3D driving events (e.g., driving in frustrating, delayed New York traffic, or arriving at full speed at a blocked accident scene with failed brakes and a pedestrian crossing).

Social robots

Social service robot: Cherry, the Little Red Robot with a Personality (described here), was one of the first fully integrated mobile robotic systems to test human-robot interaction in social contexts. We built Cherry to give visitors guided tours of our Computer Science suite. Cherry had a map of the office floor, could recognize faculty using machine vision, and could talk to visitors about each faculty member’s research. She would also get frustrated, and show it, if she kept trying to find a professor whose door was always closed…

We evaluated the reactions of people before they met Cherry and after they met her: the more people interacted with her, the more they liked her. When the project ended, many people told us they missed her roaming around our Computer Science floor, and asked if we could bring her back.

Cooperating mobile robots with emotion-based architecture: We won the Nils Nilsson Award for Integrating AI Technologies and the Technical Innovation Award when we competed at the AAAI Robot Competition: Hors D’oeuvre Anyone at the National Artificial Intelligence Conference in 2000, organized by the Association for the Advancement of Artificial Intelligence (AAAI).

We were the first team to introduce a pair of collaborating robots (described further here): Butler (taller, shown on the left) and its assistant (right). Butler moved around the crowd offering hors d’oeuvres on its tray; a laser sensor let it detect when a treat was taken, and hence determine when the tray was running low on food and needed refilling. Sonars enabled both robots to avoid obstacles such as guests… The assistant stood by the hors d’oeuvres refill station until Butler called it to bring a new, full tray for a tray exchange.

Moreover, the robots were designed with an emotion-based three-layer architecture that simulated some of the roles emotions play in human decision-making: e.g., if the assistant was too slow, Butler’s frustration would increase (and be expressed) over time, until it decided to get the tray itself. If you’re wondering about the armadillo theme, the competition was in Texas…
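To make the frustration mechanism concrete, here is a minimal Python sketch of how such a decision rule might look. It is an illustration only, not the robots' actual software: the tray capacity, thresholds, frustration increments, and action names are all assumptions, and the three-layer architecture itself is not modeled.

```python
from dataclasses import dataclass

# All constants below are illustrative assumptions, not values from the project.
TRAY_CAPACITY = 12       # assumed number of treats on a full tray
LOW_TRAY = 3             # assumed level at which Butler asks for a refill
FRUSTRATION_STEP = 0.25  # assumed growth per time step spent waiting
FRUSTRATION_LIMIT = 1.0  # assumed level at which Butler stops waiting


@dataclass
class ButlerState:
    """Hypothetical internal state of the serving robot."""
    treats_left: int = TRAY_CAPACITY
    frustration: float = 0.0
    waiting_for_refill: bool = False


def step(state: ButlerState, treat_taken: bool, assistant_arrived: bool) -> str:
    """Advance one time step and return the action Butler chooses."""
    # The laser sensor is modeled as a boolean "a treat was taken" event.
    if treat_taken and state.treats_left > 0:
        state.treats_left -= 1

    if state.waiting_for_refill:
        if assistant_arrived:
            # Tray exchange: full tray again, frustration resets.
            state.treats_left = TRAY_CAPACITY
            state.frustration = 0.0
            state.waiting_for_refill = False
            return "exchange_tray"
        # Frustration grows with every step the assistant is late.
        state.frustration = min(
            FRUSTRATION_LIMIT, state.frustration + FRUSTRATION_STEP
        )
        if state.frustration >= FRUSTRATION_LIMIT:
            return "fetch_tray_itself"
        return "express_frustration"

    if state.treats_left <= LOW_TRAY:
        state.waiting_for_refill = True
        return "call_assistant"
    return "serve"
```

With these assumed values, a Butler whose assistant never shows up calls for a refill, expresses frustration for three steps, and then goes to fetch the tray itself on the fourth.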